Understanding Apache Airflow: A Beginner’s Guide to Core Concepts

Hi, I am coder2j.

In this Airflow tutorial, we’ll delve into the core concepts of Apache Airflow.

By the end of this guide, you’ll have a solid grasp of key terms like DAG, DAG Run, Task, Operator, Task Instance, and execution_date.

If you are a video person, check out the YouTube video.

Let’s dive right in!

Getting to Know Apache Airflow

If you are new to Apache Airflow, check out our Airflow Introduction post for a detailed overview.

Let’s quickly recap what Apache Airflow is and where it came from.

- Initially developed at Airbnb in 2014 as an internal tool, Airflow was open-sourced in 2015, entered the Apache Incubator in 2016, and became a top-level Apache Software Foundation project in 2019.

- It’s a powerful workflow management platform, and the best part? It’s written in Python, so workflows are defined as Python code.

Understanding Workflow: The DAG

Now, what is a workflow?

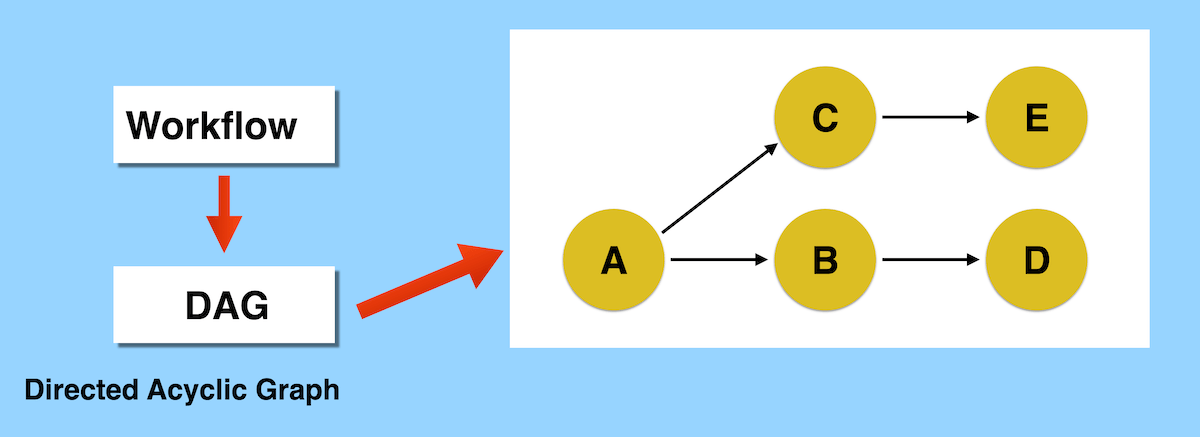

In Airflow, a workflow is defined as a DAG, which stands for directed acyclic graph. Think of it as a set of tasks connected by dependencies that determine the order in which they are executed.

For example, you might have tasks A, B, C, D, and E, where A precedes B and C, and D and E follow the completion of B and C.

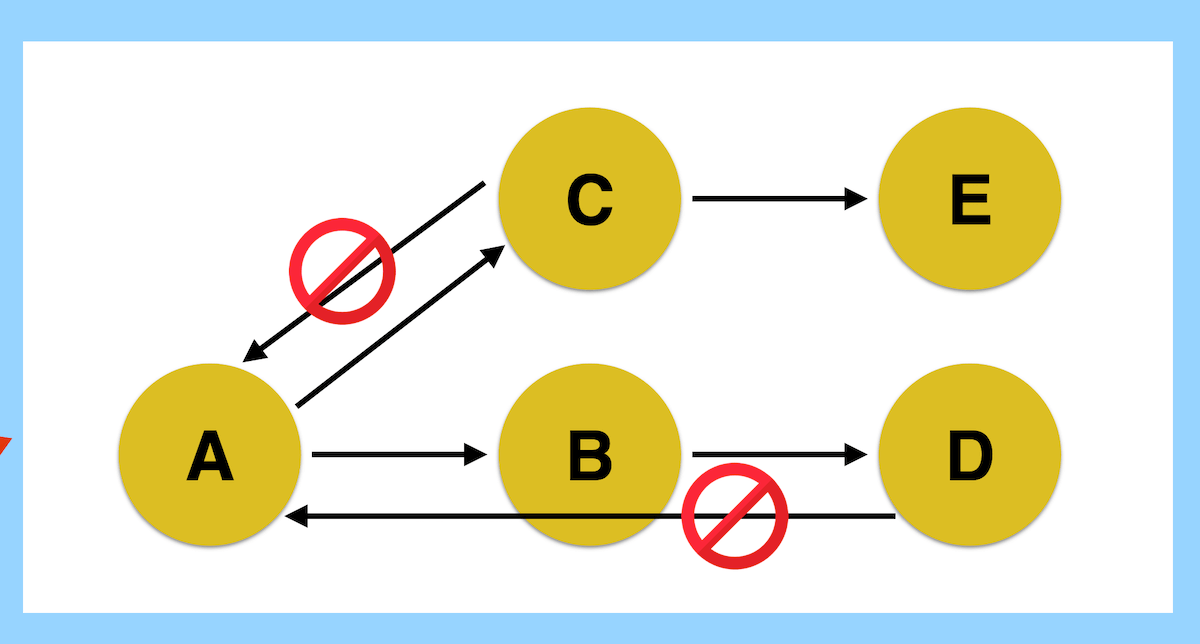

However, once Task D finishes, the workflow cannot loop back to run Task A again, because a dependency from D to A would create a cycle. Likewise, Task C cannot point back to Task A.

So, a DAG is a collection of tasks organized to reflect their relationships and dependencies. Because there are no cycles, Airflow can always work out an order in which each task starts only after the tasks it depends on have finished.
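Airflow checks for cycles itself when it parses a DAG file, so you never have to write this. Still, a small standalone sketch shows why the "acyclic" part matters: the function below uses Kahn's topological sort (a standard algorithm, not Airflow's own code) to find an order in which every task runs after its upstream tasks, and it fails exactly when the graph contains a cycle. The graph is a plain dictionary mapping each task to its downstream tasks, a representation assumed here purely for illustration.

```python
from collections import deque


def run_order(downstream: dict[str, list[str]]) -> list[str]:
    """Return one valid execution order, or raise ValueError if the graph has a cycle."""
    # Count how many upstream tasks each task is still waiting for.
    indegree = {task: 0 for task in downstream}
    for children in downstream.values():
        for child in children:
            indegree[child] = indegree.get(child, 0) + 1

    # Tasks with no upstream dependencies can start immediately.
    ready = deque(task for task, waiting in indegree.items() if waiting == 0)
    order = []
    while ready:
        task = ready.popleft()
        order.append(task)
        # Finishing a task releases one dependency of each downstream task.
        for child in downstream.get(task, []):
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)

    # If some tasks never became ready, they are stuck waiting on each other: a cycle.
    if len(order) != len(indegree):
        raise ValueError("cycle detected: no valid execution order exists")
    return order


graph = {"A": ["B", "C"], "B": ["D", "E"], "C": ["D", "E"], "D": [], "E": []}
print(run_order(graph))  # ['A', 'B', 'C', 'D', 'E']
```

Adding an edge from D back to A, the forbidden case described above, leaves every task waiting on some upstream task, so nothing can start and run_order raises ValueError.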

Decoding Task and Operator

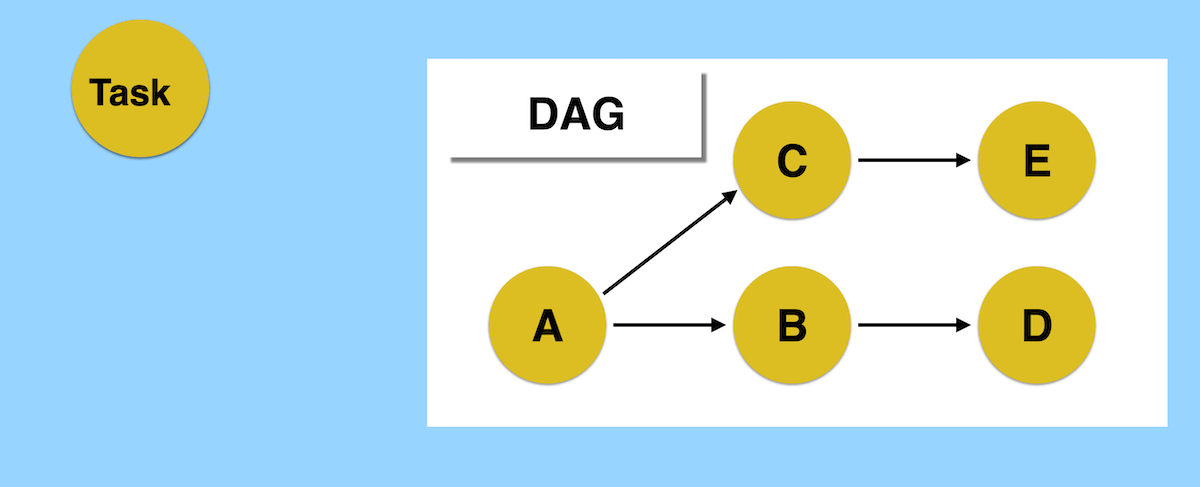

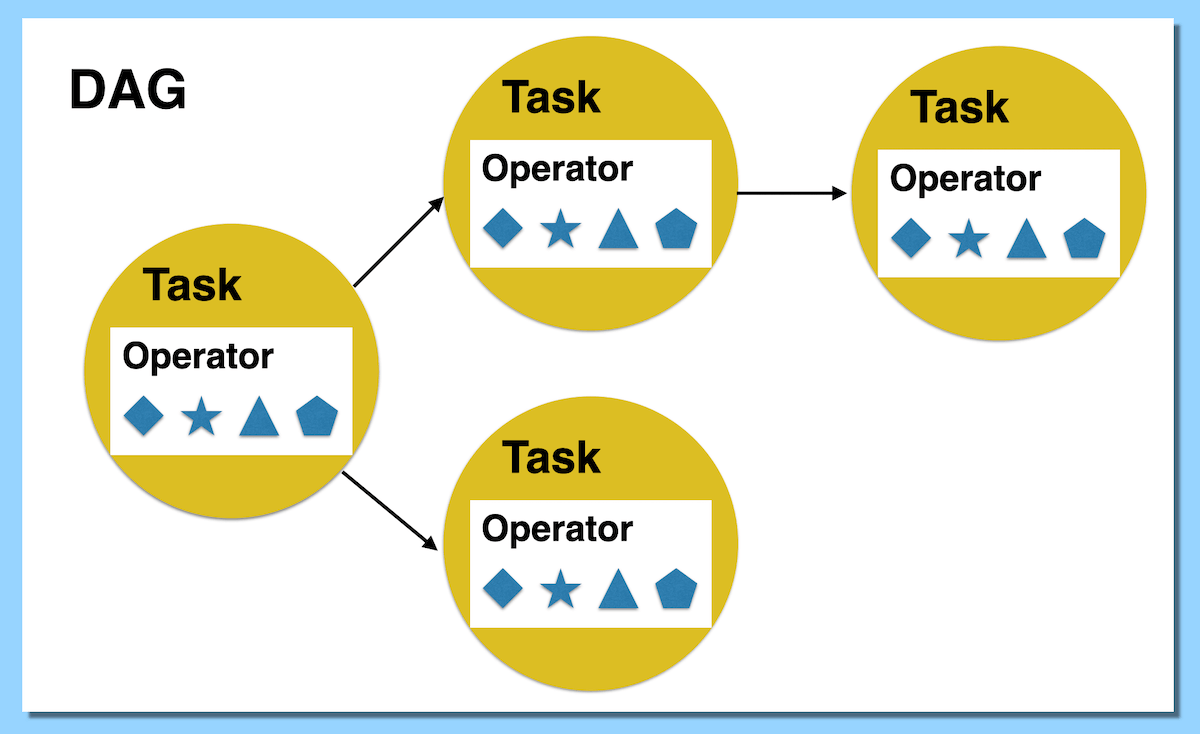

In the Airflow universe, a task represents a unit of work within a DAG. Each task is a node in the graph and is defined in Python. Tasks are connected by dependencies; for instance, Task C is downstream of Task A (it runs after A), and Task C is upstream of Task E (E waits for C).

The key to understanding what a task does lies in its operator, which determines the actual action the task performs. Airflow provides various built-in operators, such as BashOperator and PythonOperator, and you can also write your own custom operators. Each task is an instance of an operator, created with specific argument values for that operator, so a single operator can power many different tasks.

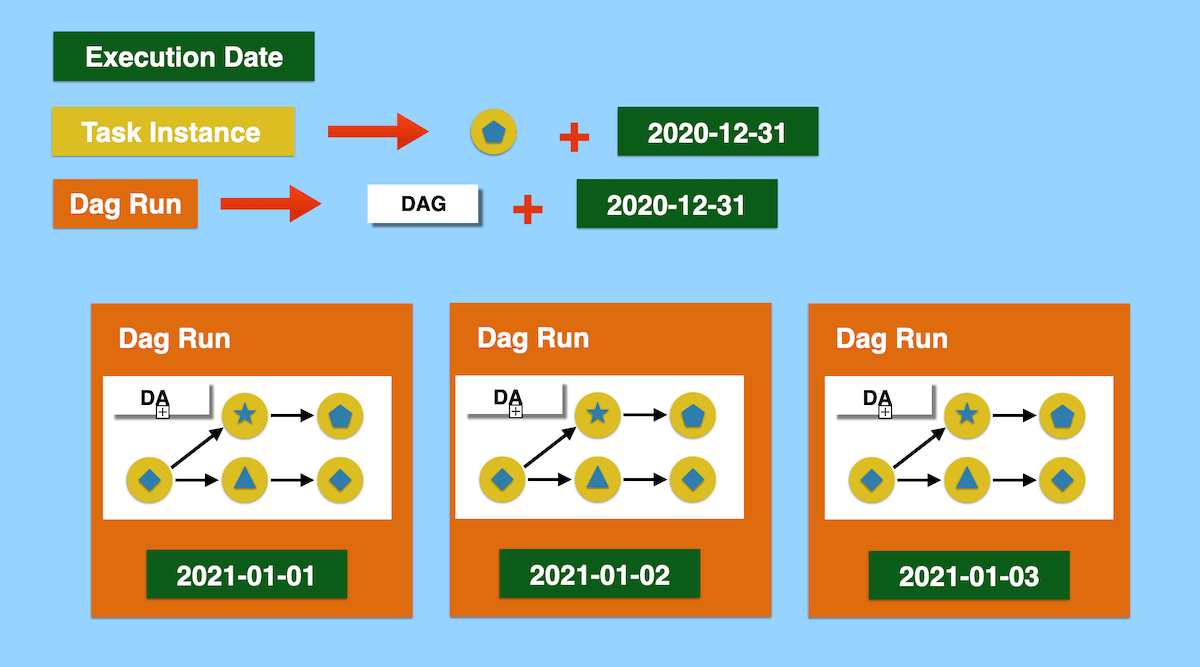

Execution Date, DAG Run, and Task Instance

Let’s demystify three critical concepts: execution_date, DAG run, and task instance.

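- Execution Date: The logical date and time that a DAG run and its task instances represent, which is not necessarily the moment they actually run. For example, a daily DAG might have DAG runs for 2021-01-01, 2021-01-02, and 2021-01-03; with Airflow 2’s default scheduling, the run for 2021-01-01 starts only after that day is over. Since Airflow 2.2, this value is also called logical_date.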

- Task Instance: A single run of a task for a specific point in time, identified by its execution_date.

- DAG Run: An instantiation of a DAG, containing the task instances that run for a specific execution_date.
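The relationship between these three terms can be sketched with a few plain Python dataclasses. This is a toy model for illustration only: DagRun, TaskInstance, and schedule_daily_runs below are hypothetical stand-ins named after the concepts, not Airflow’s own classes, and the schedule is simplified to one run per calendar day from a fixed start date.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass(frozen=True)
class TaskInstance:
    # Hypothetical stand-in: identified by its DAG, its task, and the run's execution_date.
    dag_id: str
    task_id: str
    execution_date: datetime


@dataclass
class DagRun:
    # Hypothetical stand-in: one instantiation of a DAG for a single execution_date.
    dag_id: str
    execution_date: datetime
    task_instances: list[TaskInstance] = field(default_factory=list)


def schedule_daily_runs(dag_id: str, task_ids: list[str], start: datetime, count: int) -> list[DagRun]:
    """Create `count` daily DAG runs from `start`, each with one task instance per task."""
    runs = []
    for day in range(count):
        execution_date = start + timedelta(days=day)
        run = DagRun(dag_id, execution_date)
        # Every task instance in a run shares that run's execution_date.
        run.task_instances = [TaskInstance(dag_id, t, execution_date) for t in task_ids]
        runs.append(run)
    return runs


runs = schedule_daily_runs("example_abcde", ["A", "B", "C", "D", "E"], datetime(2021, 1, 1), 3)
for run in runs:
    print(run.execution_date.date(), len(run.task_instances), "task instances")
```

Running it prints one line per DAG run (2021-01-01, 2021-01-02, and 2021-01-03), each with 5 task instances: one per task, all sharing that run’s execution_date.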

That’s it! You’ve now navigated through the fundamental concepts of Apache Airflow. DAGs, tasks, operators, execution dates, DAG runs, and task instances are the foundation for building and managing workflows in Airflow.

Now, it’s your turn. Did I cover every Airflow core concept you need to know?

Let me know in the comments below if you run into any issues or have any suggestions.
